Introduction

A four-operation arithmetic calculator may be considered the simplest form of task automation, given the vast capabilities of modern general-purpose languages (GPLs) such as Python, the C family, R and JavaScript.

Such functionality can be implemented effortlessly without machine learning, provided we know the axioms of arithmetic. We are also aware that the premise is somewhat self-contradictory: the core of any modern training loop invokes exactly these fundamental calculations anyway.

I wrote this article not as a rigorous scientific exposition but for its pedagogical value, along with some practical lessons in machine learning, summarized below.

- in practice, it is not effective to train a model to output the exact answer for each input when the algorithm is already known;

- addition and subtraction can be trained well by classical ML methods naively, with no operation-specific design, to within 1e-6 of a perfect R² score (cf. 1e-2 or better in pointwise difference; this measure depends on the range of the training data, set to [-100, 100] for this experiment).
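As a rough illustration of the second point, the sketch below fits ordinary least-squares linear regression to random integer pairs in [-100, 100] and reports 1 − R² and the largest pointwise error on fresh samples. Least squares is our own stand-in for a "classical ML method", not the dense networks described under Architectures; the sample size, seed and the helper name `fit_and_score` are illustrative assumptions. Multiplication is included only for contrast.

```python
import numpy as np


def fit_and_score(op, n=1000, low=-100, high=100, seed=0):
    """Fit y ~ w1*a + w2*b + c by least squares; return (R^2, max |error|) on fresh data."""
    rng = np.random.default_rng(seed)

    def sample(size):
        # Random integer pairs (a, b) drawn uniformly from [low, high], inclusive.
        x = rng.integers(low, high + 1, size=(size, 2)).astype(float)
        return x, op(x[:, 0], x[:, 1])

    x_train, y_train = sample(n)
    x_test, y_test = sample(n)

    # Append a bias column and solve the linear least-squares problem.
    coef, *_ = np.linalg.lstsq(np.column_stack([x_train, np.ones(n)]), y_train, rcond=None)
    y_pred = np.column_stack([x_test, np.ones(n)]) @ coef

    # R^2 = 1 - SS_res / SS_tot, plus the worst pointwise deviation.
    ss_res = np.sum((y_test - y_pred) ** 2)
    ss_tot = np.sum((y_test - y_test.mean()) ** 2)
    return 1.0 - ss_res / ss_tot, np.max(np.abs(y_test - y_pred))


for name, op in [("add", np.add), ("sub", np.subtract), ("mul", np.multiply)]:
    r2, err = fit_and_score(op)
    print(f"{name}: 1 - R^2 = {1 - r2:.1e}, max |error| = {err:.1e}")
```

Because addition and subtraction are themselves linear in (a, b), this model recovers them up to floating-point rounding, whereas for multiplication on a symmetric range the best linear fit explains essentially none of the variance.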

Here is the question on which our experimental design is based.

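What would a trained model answer to the question “what is the result of $(\mathrm{op}\ a\ b)$?”, if the model had merely observed a sequence of randomly generated pairs of integers combined by a given operator $\mathrm{op}$, where $\mathrm{op} \in \{+, -, \times, \div\}$ and $a, b \in [-100, 100]$?[1]

[1] cf. operators in LISP, e.g. (+ a b).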
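A minimal sketch of the kind of training data this question describes, assuming integer operands drawn uniformly from [-100, 100] and, for division, a non-zero divisor (our assumption; how zero divisors were handled is not stated here). The helper `make_dataset` and the s-expression printout are hypothetical, chosen only to mirror the LISP-style notation.

```python
import operator
import random

# The four operators, keyed by their LISP-style symbols.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def make_dataset(symbol, n, low=-100, high=100, seed=0):
    """Return n triples (a, b, result) for the operator named by `symbol`."""
    rng = random.Random(seed)
    op = OPS[symbol]
    data = []
    while len(data) < n:
        # randint is inclusive at both ends, so a, b lie in [low, high].
        a, b = rng.randint(low, high), rng.randint(low, high)
        if symbol == "/" and b == 0:
            continue  # skip undefined division (assumed handling)
        data.append((a, b, op(a, b)))
    return data


# Show a few division samples in prefix (s-expression) form.
for a, b, y in make_dataset("/", 3):
    print(f"(/ {a} {b}) => {y}")
```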

Setup

Architectures

The model architectures are as follows.

- Note that

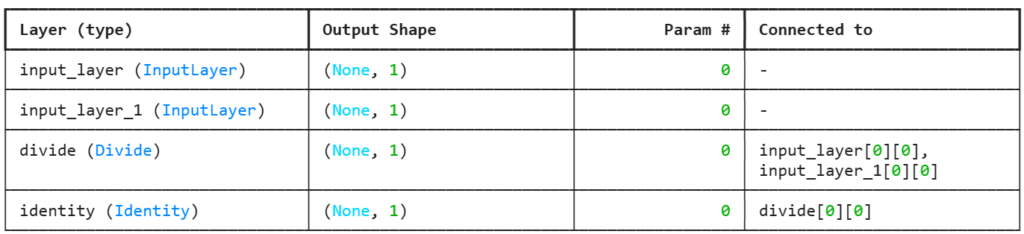

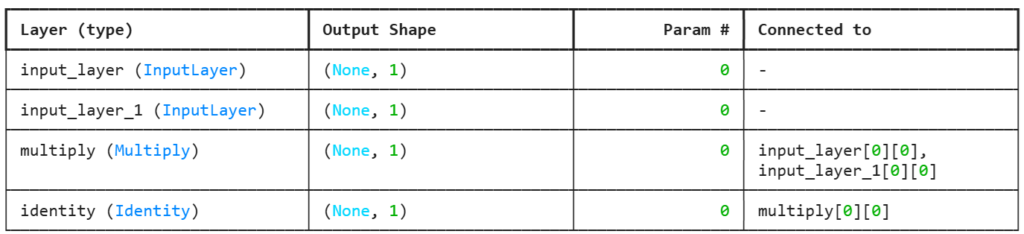

sigmoidactivation layer follows the first dense layer, in addition and subtraction model; - At first we employed similar and/or expansive architectures for multiplication and division as other two, failed at attaining acceptable pointwise precision;

- We ended up using layers with no trainable parameters, keras.Layers.Multiply and custom layer

Dividefor multiplication and division models respectively, defined by

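    import tensorflow as tf
    from tensorflow.keras.layers import Layer


    class Divide(Layer):
        """Parameter-free layer returning the element-wise quotient x[0] / x[1]."""

        def __init__(self, **kwargs):
            super().__init__(**kwargs)

        def build(self, input_shape):
            # Nothing to build: the layer has no trainable weights.
            super().build(input_shape)

        def call(self, x):
            # x is a pair [dividend, divisor]; divide element-wise.
            return tf.divide(x[0], x[1])

        def compute_output_shape(self, input_shape):
            # The output has the shape of the dividend, e.g. (None, 1).
            return input_shape[0]

Outcome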

We published the model files (*.keras) and a working demo here (Hugging Face).
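Because the division model contains a custom layer, Keras needs that class when a saved *.keras file is loaded. The sketch below, assuming TensorFlow 2.x with tf.keras, rebuilds a parameter-free division model with a trimmed copy of the Divide layer from the Architectures section, saves it and reloads it via `custom_objects`. The file name is hypothetical; this is not the published model file, only an illustration of the same loading mechanism.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class Divide(tf.keras.layers.Layer):
    """Parameter-free layer computing the element-wise quotient x[0] / x[1]."""

    def call(self, x):
        return tf.divide(x[0], x[1])

    def compute_output_shape(self, input_shape):
        # Same shape as the dividend, e.g. (None, 1).
        return input_shape[0]


# Two scalar inputs mapped straight to their quotient; nothing is trained.
a = tf.keras.Input(shape=(1,))
b = tf.keras.Input(shape=(1,))
model = tf.keras.Model(inputs=[a, b], outputs=Divide()([a, b]))

# Save in the native .keras format, then reload it. The custom class must be
# passed through custom_objects so Keras can reconstruct the Divide layer.
path = os.path.join(tempfile.mkdtemp(), "division_model.keras")  # hypothetical name
model.save(path)
restored = tf.keras.models.load_model(path, custom_objects={"Divide": Divide})

print(restored.predict([np.array([[6.0]]), np.array([[3.0]])], verbose=0))
```

The multiplication model needs no such step, since keras.layers.Multiply is a built-in layer.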

As the nature of the task implies, models with numeric output under a known formula[2] seem always to suffer from overfitting because of the level of precision required; addition and subtraction can be regarded as exceptions.

[2] i.e. exact numeric ground truth is provided.

The next question, therefore, may be: which types of functions, and which compositions of them, are well trainable, in the sense that relatively high precision is attainable?